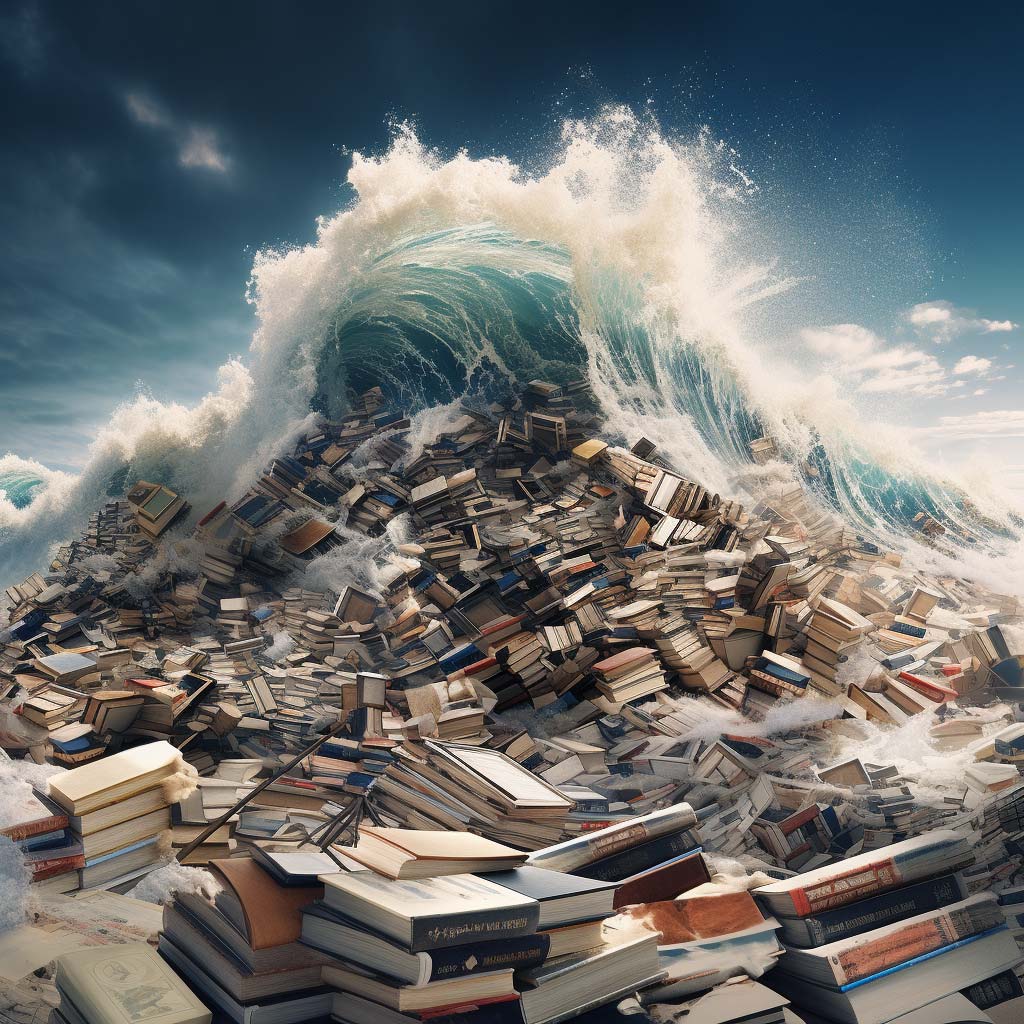

A tsunami of AI-generated books is coming… but are we prepared?

Maybe you’ve already noticed the titles popping up on Amazon. At once generic, perfectly plausible and strangely unnerving, they almost proudly plant their flag in the uncanny valley, and simply hope that a few punters are happy to part with $2.99 to give them a try.

These books took mere hours, potentially minutes, to create: a succession of words that is unique, sure, but interesting, or even sensible? That’s harder to say.

Self-published books that are completely AI-generated: it’s not the future, it’s the now. So what are the consequences, and what is the industry to do?

The rise of the self-publishing machines

ChatGPT has changed a great many professions in the short time it has graced this earth. Perhaps surprisingly, its effects on authors have been less pronounced than one might have expected of an AI that is really good at writing, at least when compared to the changes felt by the likes of coders and online marketers.

But thanks to self-publishing, the uncut, uncoloured, unedited work of generative AI is beginning to creep into the book world: type some prompts, hit enter, slap on a cover, meet your costs by your third sale.

It’s a situation with one winner – the “author” of the AI-generated book – and many losers: the buyer who wastes their hard-earned money on a subpar novel, the seller (Amazon) whose reputation is tarnished by facilitating the transaction, and, perhaps most importantly, the human authors who now find themselves on a greatly changed and rather unfair playing field.

A battle of quality versus quantity

Historically the self-publishing world has been a difficult one to spam and scam. Books took time, even the self-help or cheap-thrill junk that was written fast to sell fast. If you weren’t willing to spend the requisite amount of time, to follow due process, to project some semblance of quality and authority in your work, there simply wasn’t much point in bothering.

If you were writing for the money, not the love, you would rarely be happy with the returns on what was usually a significant investment, if not of money then of time.

But in generative AI like ChatGPT, spammers and scammers have what they see as the perfect get-rich-quick tool for the self-publishing industry. They can bang out a few prompts and instantly generate up to 25,000 largely coherent words (the processing limit quoted for GPT-4). Two queries and you’ve got a novel the length of The Great Gatsby, which runs to roughly 47,000 words.

Ensconce the AI-generated words in some AI-generated art and you have an average (and possibly nonsensical) book in a possibly eye-catching (but decidedly amateur) cover.

It doesn’t sound like a runaway success, and that’s because it doesn’t have to be. This thing was made in next to no time for next to no dollars. If the author charges a couple of bucks per unit, they only have to sell a handful of copies to break even.

Take Frank White (a pseudonym), the esteemed author of the page-turning novella Galactic Pimp: Vol. 1. Frank used ChatGPT to write (and publish) this tome in less than a day, offering it through Amazon for just $1. As he explained on a YouTube stream later, he could comfortably create 300 such books in a year. If just a handful experience even a mild amount of success, he starts dealing in pure profit.
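To put rough numbers on that break-even point, here is a minimal sketch in Python. The two prices come from this article; the production cost and the royalty rates are assumptions chosen purely for illustration, and the function name `copies_to_break_even` is hypothetical.

```python
import math


def copies_to_break_even(cost_per_book: float, list_price: float, royalty_rate: float) -> int:
    """Return the smallest number of sales whose royalties cover one book's cost."""
    royalty_per_copy = list_price * royalty_rate
    if royalty_per_copy <= 0:
        raise ValueError("list_price and royalty_rate must both be positive")
    return math.ceil(cost_per_book / royalty_per_copy)


# Assumed figures, not taken from the article: adjust them to taste.
ASSUMED_COST_PER_BOOK = 5.00    # assumed outlay on tools and cover art, in dollars
ASSUMED_ROYALTY_AT_2_99 = 0.70  # assumed share of a $2.99 sale kept by the author
ASSUMED_ROYALTY_AT_1_00 = 0.35  # assumed share of a $1.00 sale kept by the author

sales_needed_at_2_99 = copies_to_break_even(ASSUMED_COST_PER_BOOK, 2.99, ASSUMED_ROYALTY_AT_2_99)
sales_needed_at_1_00 = copies_to_break_even(ASSUMED_COST_PER_BOOK, 1.00, ASSUMED_ROYALTY_AT_1_00)

print(sales_needed_at_2_99)  # 3
print(sales_needed_at_1_00)  # 15
```

Under those assumptions a $2.99 title pays for itself on its third sale and a $1 title on its fifteenth. Change the assumed cost and the figures shift, but the shape of the argument holds: when a book costs almost nothing to produce, almost any sales at all will cover it.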

Human authors were always steered by quality, because that’s ultimately what made money. AI-generated books are changing that reality. If you can generate a lot of material quickly, you can scam and spam your way to profit.

And the worry is that at some point, the quantity may begin to drown the quality out.

Certification: a potential solution

Amazon doesn’t require its self-published authors to publicly disclose whether they’ve used generative AI to write their book. At this point its stance seems to be that quality will rise to the top, and it is happy to let the market decide (the tried and true strategy of sitting on corporate hands).

Would shoppers be as willing to part with their money if they knew a book was entirely generated by AI? Without data it’s hard to say, but in terms of gut feel it’s an obvious no.

The issue is that there’s no real incentive for a self-publishing spammer to disclose what they’re doing. But there’s another way: create a system that certifies human-produced material, and that human authors will be excited to use.

What might this certification look like? Well, imagine:

- An initial process where you upload your human-written manuscript to a web application, and follow a few checks to confirm you wrote that manuscript.

- A secondary set of very human certification steps designed to check that all is above board.

- A clear label, complete with QR code for context, on any book proven, to a certain benchmark and beyond a reasonable doubt, to have been written by a human.

With such a system, a human author could truly separate themselves from the AI crowd. They could charge their human premium by proving the book’s worth, as readers would be reassured that the money they spend is a fair reflection of the blood, sweat, love and tears that were expended on the book. Readers could shop exclusively for human-produced stories, filtering the bot-generated content out of their searches.

Such a system exists. It’s called Authortegrity. And there’s an Alpha release available for testing.